If you’re just now joining us, we’ve been talking about creating huge Madlib Sites and powerful Link Laundering Sites. So we’ve built some massive sites with some serious ranking power. Now, however, we’re stuck with the problem of getting these puppies indexed quickly and thoroughly. If you’re anything like the average webmaster, you’re probably not used to getting a 20k+ page site fully indexed. It’s easier than it sounds; you just have to pay attention, do it right and, most importantly, follow my disclaimer: these tips are only for sites in the 20k-200k page range. Anything smaller or larger requires totally different strategies. Let’s begin by setting some goals for ourselves.

Crawling Goals

There are two very important aspects of getting your sites indexed right. First is coverage.

Coverage: Getting the spiders to the right areas of your site. I call these areas the “joints.” Joints are pages through which many important landing pages are connected or found, yet which are buried deep in the site. For instance, if you have a directory-style listing with links at the bottom of the results saying “Page 1, 2, 3, 4, etc.”, each of those results pages is a joint page. It has no real value other than listing more pages within the site. Joints are important because they allow the spiders and visitors to find the landing pages, even though they hold no real SEO value themselves. If you have a very large site, it is of the utmost importance to get its joints indexed first, because the other pages will naturally follow.

The second important factor is the sheer volume of spider visits.

Crawl Volume: This is the science of drawing as many spider visits to your site as possible. Volume is what will get the most pages indexed. Accurate coverage is what will keep the spiders on track, instead of them just hitting your main page hundreds of times a day and never following the rest of the site.

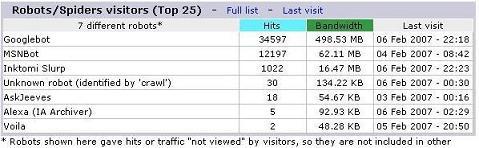

This screenshot is from a new Madlib site of mine that is only about 10 days old and has very few inbound links. It’s only showing the crawl stats from Feb. 1st-6th (today). As you can see, that’s over 5,700 hits/day from Google, followed closely by MSN and Yahoo. It’s also worth noting that the SITE: command is equally impressive for such an infant site, showing a count in the low xx,xxx range. So if you think that taking the time to develop a perfect mixture of Coverage and Crawl Volume isn’t worth the hassle, then the best of luck to you in this industry. For the rest of us, let’s learn how it is done.

Like I said, this is much easier than it sounds. We’re going to start off with a very basic tip, move on to a tad more advanced method and end on a very advanced technique I call Rollover Sites. I’m going to leave you to choose your own level of involvement here. Feel free to follow along up to the point of getting uncomfortable. There’s no need to fry brain cells trying to follow techniques that you are not ready for. Not using these tips by no means equals failure, and some of them will require some technical know-how, so at least be ready for that.

Landing Page Inner Linking

This is the most basic of the steps. Let’s refer back to the dating site example in the Madlib Sites post. Each landing page is an individual city, and each landing page suggests dating in the cities nearby. The easiest way to do this is to look for the zip codes numerically closest to the current one. Another is to grab the row IDs of the entries just before and after the current row ID. This causes the crawlers to move outward from each individual landing page until they reach every single landing page. Proper inner linking among landing pages should be common sense for anyone with experience, so I’ll move on. Just be sure to include these links, because they play a very important role in getting your site properly indexed.
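Here is a minimal Python sketch of both ways of picking nearby pages. It is only an illustration under assumptions: the function names, the `span` and `count` parameters, and the wrap-around at the ends of the table (so the first and last rows still get a full set of neighbors) are not from the Madlib Sites post, and zip codes are treated as plain integers.

```python
def neighbors_by_row_id(row_id, total_rows, span=2):
    """Row IDs just before and after row_id (1-based).

    Wrapping around the ends of the table is an assumption made here so that
    the first and last landing pages still link to a full set of neighbors.
    """
    ids = []
    for offset in range(-span, span + 1):
        if offset == 0:
            continue  # never link a page to itself
        ids.append((row_id - 1 + offset) % total_rows + 1)
    return ids


def nearest_zipcodes(zipcode, all_zips, count=4):
    """Up to `count` zip codes numerically closest to `zipcode`."""
    others = [z for z in all_zips if z != zipcode]
    # Sort by numeric distance; ties are broken by the lower zip code.
    return sorted(others, key=lambda z: (abs(z - zipcode), z))[:count]
```

Either list can then be looked up in the database and rendered as the “dating in cities nearby” links on each landing page.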

Reversed and Rolling Sitemaps

By now you’ve probably put up a simple sitemap on your site and linked to it from every page within your template. You’ve figured out very quickly that a big ass site = a big ass sitemap. It is a common belief that the search engines treat a page that is an apparent sitemap differently from other pages in the number of links they are willing to follow, but when you’re dealing with a 20,000+ page site that’s no reason to view the sitemap’s indexing power any differently than any other page’s. Assume the bots will naturally follow only so many links on a given page, and optimize your sitemap with that reasoning in mind.

If you have a normal 1-5,000 page site, it’s perfectly fine to have a small sitemap that starts at the beginning and finishes with a link to the last page in the database. However, when you’ve got a very large site like a Madlib site might produce, that becomes a foolish waste. Your main page naturally links to the landing pages with low row IDs in the database, so they are the most apt to get crawled first. Why waste a sitemap on getting those same pages crawled first as well? A good idea is to reverse the sitemap by changing your ORDER BY id into ORDER BY id DESC (descending, meaning the last pages show up first and the first pages show up last). This makes the pages that naturally show up last appear first in the sitemap, so they get prime attention. The crawlers will then index the front pages of your site at about the same time they index the deeply linked pages (the last pages). If your inner linking is set up right, they will work their way from the front and back of the site inward simultaneously until they reach the landing pages in the middle, which is much more efficient than working from front to back in a linear fashion.

An even better method is to create a rolling sitemap. For instance, if you have a 30,000 page site, have it list entries 30,000-1 for the first week, then 25,000-1 followed by 30,000-25,001 for the second week. The third week would be 20,000-1 followed by 30,000-20,001. Repeat so on and so forth, eventually pushing each 5,000 page chunk to the top of the list while keeping the entire list intact and static. This will cause the crawlers to eventually work their way from multiple entry points outward and inward at the same time. You can see why the rolling sitemap is the obvious choice for the pro wanting some serious efficiency from the present Crawl Volume.
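To make the rolling order concrete, here is a minimal Python sketch. It is not the code behind any particular site; `rolling_sitemap_order`, its parameters and the zero-based week counter are names made up for illustration, and it assumes row IDs run from 1 to the total page count with no gaps.

```python
def rolling_sitemap_order(total_pages, chunk_size, week):
    """Row IDs in the order the sitemap should list them for a given week.

    week 0 is the plain reversed sitemap (last row first). Each later week
    rotates one more chunk from the top of that list to the bottom, so the
    full list stays intact and only the starting point moves.
    """
    descending = list(range(total_pages, 0, -1))
    shift = (week * chunk_size) % total_pages
    return descending[shift:] + descending[:shift]


if __name__ == "__main__":
    # The 30,000 page example with 5,000 page chunks, second week.
    order = rolling_sitemap_order(30_000, 5_000, week=1)
    print(order[0], order[24_999], order[25_000], order[-1])
```

With 5,000 page chunks on a 30,000 page site, the order comes back around to the plain reversed sitemap after six weeks.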

Deep Linking

Deep linking from outside sites is probably the biggest factor in producing high Crawl Volume. A close second would be using the above steps to show the crawlers the vast amounts of content they are missing. The most efficient way to get your massive sites indexed is to generate as many outside links as possible directly to the sites’ joint pages. Be sure to link to the joint pages, as well as the main page, from your more established sites. There are some awesome ways to get a ton of deep links to your sites, but I’m going to save them for another post.

Rollover Sites

This is where it gets really cool. A Rollover Site is a specially designed site that grabs content from your other sites, gets its own pages indexed, and then rolls over: its pages die to help the pages of your real site get indexed. Creating a good Rollover Site is easy; it just takes a bit of coding knowledge.

First you create a main page that links to 50-100 subpages. Each subpage is populated with data from the databases of the large sites you want to get indexed (Madlib sites, for instance). Then you focus some link energy from your Link Laundering Sites on getting the main page indexed and crawled as often as possible. This gives you a small site that gets indexed very easily and quickly.

Next you create a daily cron job that queries the Google, Yahoo, and MSN APIs using the SITE: command. Parse all the results from the engines and compare them with the current list of pages the site has. Whenever a subpage (the pages that use the content of your large sites, not the main page) is indexed in all three engines, have the script remove the page and replace it with a permanent 301 redirect to the target landing page on the large site. Then mark that landing page in the database as “indexed.” This is best accomplished by adding a boolean column called “indexed” to your database. Whenever it is “true,” your Rollover Sites ignore the entry and move on to the next one when creating their subpages.

It’s the automation that holds the beauty of this technique. Each subpage gets indexed very quickly, and when it redirects to the big site’s landing page, that page gets indexed instantly. Your Rollover Sites keep a constant count of subpages and just keep rolling them over and creating new ones until all of your large site’s pages are indexed. I can’t even describe the amazing results this produces. You can have absolutely no inbound links to a site and create both huge Crawl Volume and Coverage from just a few Rollover Sites in your arsenal. Right when you were starting to think Link Laundering Sites and Money Sites were all there was, huh?
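The daily rollover pass can be sketched roughly as below. This is a minimal illustration in Python, not a finished script: it deliberately leaves out the search engine queries and just takes, per engine, the set of subpage slugs already found by a SITE: lookup. `daily_rollover`, `slug_for`, the dictionary layout and the example URLs are all hypothetical names chosen for this sketch.

```python
from urllib.parse import urlparse


def slug_for(target_url):
    """Hypothetical helper: derive a rollover subpage slug from the target URL's path."""
    return urlparse(target_url).path.strip("/").replace("/", "-")


def daily_rollover(active, indexed_by_engine, pending_targets):
    """Run one daily pass over a rollover site.

    active            -- dict mapping subpage slug -> target landing page URL
    indexed_by_engine -- dict mapping engine name -> set of slugs that engine
                         reports as indexed (however you collect that)
    pending_targets   -- list of landing page URLs not yet marked "indexed";
                         consumed from the front as new subpages are created
    Returns a dict slug -> target URL for the subpages that should now 301.
    """
    # A subpage only rolls over once every engine has it.
    if indexed_by_engine:
        in_all = set.intersection(*indexed_by_engine.values())
    else:
        in_all = set()

    redirects = {}
    for slug in list(active):
        if slug in in_all:
            redirects[slug] = active.pop(slug)

    # Keep the subpage count constant by promoting the next pending targets.
    for _ in range(len(redirects)):
        if not pending_targets:
            break
        target = pending_targets.pop(0)
        active[slug_for(target)] = target

    return redirects
```

The caller would then write a 301 for every entry in the returned dictionary and set the “indexed” column to true for each of those target landing pages, so they are never picked again.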

As you are probably imagining, I could easily write a whole e-book on just this one post. When you’re dealing with huge sites like Madlib sites, there are a ton of ways to get them indexed fully and quickly. However, if you focus on just these 4 tips and give them the attention they deserve, there really is no need to ever worry about how you’re going to get your 100,000+ page site indexed. A couple of people have already asked me if I think growing the sites slowly and naturally is better than producing the entire site at once. At this point I not only don’t care, but I have lost all fear of producing huge sites and presenting them to the engines in their entirety. I have also seen very few downsides to it, and definitely not enough to justify putting in the extra work to grow the sites slowly. If you’re doing your indexing strategies right, you should have no fear of the big sites either.

With all the wonderland of guidance Blue Hat provides, any suggestions on hiring programmers? Or project management geared toward ever greater levels of automation?

At the levels you are discussing, working towards not reinventing the wheel would seem to be a big priority in getting things to scale properly.

Also, I appreciate your inviting other viewpoints. My personal one is it’s a poor world where people are intolerant, avoid helping one another, put all their attention onto money, or look for loopholes instead of making their corner of the world a better place.

You’re showing a lot of tolerance in making your corner a better place, and while I don’t share some of your goals, it’s a real pleasure to have the privilege of your guidance through this blog!

Thank you for this great information. You write very well, which I like very much. I’m really impressed by your post.

Damn, I already have it and I’m still doing the same thing.

Thanks for posting! I really like what you’ve acquired here; you should keep it up forever! Best of luck.

Thank you!

man, you have so many good ideas that ive never heard anywhere else. Keep up the good posts , i love reading your blog

Quit was a great tool

Too bad it’s gone ://

As I understand your rolling sitemap, you keep all 30,000 links in your sitemap - the same links always - and just switch the order of them from time to time.

Is it very important to keep the map ’static’ e.g. to have all the links in the map always?

I was thinking about just putting 2-3k in my sitemap at a time and then replacing them with a new batch now and then. Would that work?

Great question.

I think having a static sitemap is very important. In fact, keep it as static as possible. Like I mentioned in the article, only change it once a week or so. As far as having ALL the links on the sitemap at all times, it is important for sites in this size range. The technique you’re touching on is a chunked sitemap. It’s a sitemap technique for sites that are over the 200k page mark. I won’t get into too much detail on it, but basically what it boils down to is having multiple sitemaps on the same site, each one rolling at a different interval: one daily sitemap, one weekly sitemap and one monthly sitemap. Each one is larger than the one before it. I’ll eventually explain the whole thing in a post. For now, don’t worry about creating a dynamic feature on your sitemaps, because it’s pretty useless without a chunked sitemap system. We’ll dive into that some other time.

Great post, and I really appreciate the level of detail you go into.

I do have a question, though, about churning out so many pages in such a short time. I’ve had a couple of sites nuked by Google in the past (PR plummets from 4 to 0 and/or every page kicked to supplemental results). Both were existing sites with 100 or so pages that were fully indexed and ranking well; I expanded them to 20,000+ pages using automatically generated content, and they were nuked by Google shortly thereafter.

There seems to be some evidence out there that Google doesn’t take kindly to huge sites with tens of thousands of pages suddenly springing up overnight, as that’s not the way big sites have traditionally evolved over time, growing slowly and steadily. Explosive growth like that is also a trivial thing for Google to track, as it’s not hard to identify at all.

Do you really think there’s no danger in creating huge sites like you outline and publishing all of the pages immediately?

This depends on how you do it.

When starting off (just to get the pages indexed) I wouldn’t include any advertisements, just the content / verbiage. The big thing here is to have each page NOT LOOK like a spam site. But if you have 50,000+ pages of quality content, then you have nothing to fear.

Quality content examples -> baseball card stats, eBay items, Amazon products; the list is endless.

Then after you’re fully indexed (depending on the size of the site, it can take 2-4 weeks for 75k+ pages), you add your advertisements and voilà, instant money.

Rob is right. It’s the content that burns your site not the growth speed.

Many thanks for the advice.

Could you clarify the bit about the ads being what potentially gets you in trouble with search engines?

I realize that if you launch the site with both ads and content, that the ads will increase the percentage of non-unique content from page to page, and that the ads show up as elements on every single page. I also get the concept of the pages needing to contain quality, unique content, and understand how publishing the site without ads can help it get indexed and not trigger any sort of penalty.

But if the ads are what triggers some sort of penalty, won’t all of your pages get booted on the next crawl after you add the ads? Or do you treat these as throw-away sites, which you get indexed (without ads), then slap in some ads, make some money, and resign yourself to the pages dropping out of results over time, as the pages are crawled again, ads are discovered, and a penalty is triggered?

Thanks for any advice.

Ok, here is the problem:

You will want to have your site indexed in Google as most of the traffic comes from it.

Adsense is the most profitable money-maker on this kind of site, but is the best spy from google to catch you if you go grey/blackhat.

Obviously there’s a conflict : Google and Adsense are the same system.

I once tried to create pages with high paying keywords, to get adsense serving high $$$ ads. Within 12 hours, I had some real people (not bot) from google visiting the pages, and my site as well. Guess what? I got my site banned from google search results and the ads money dropped to almost zero!

So to be safe, if you manage to make some 20K+ pages site look genuine, no worry, but if it is some simple madlib, you might prefer to get affiliates $$$ (the example from Eli is really good BTW).

Remember that if you get a few sites banned and you used adsense on them, your unique adsense publisher ID is a very big footprint to let google find any other website you create.

I have noted this.

Yet another great idea from BlueHat. Keep up the excellent work, sir!

Ben

http://idleprofit.com

That’s awesome. I was wondering how to get a huge site like that indexed so fast. Can’t wait to read more about these kind of site strategies.

The rollover site idea has worked wonders for me in the past when trying to correct supplemental issues for large dynamic client sites. I use other techniques with my “madlib” sites but will be adding this to my arsenal.

Superb post! I’ll try this methods to index better some of my sites. Thanks for this blog eli.

Another great post Eli thanks for sharing

With your Madlib site did you use a brand new domain name or was it an already established domain with page rank?

I totally agree with you phil

This is awesome

thanks,

I know I only listed one as an example above, but that one was actually part of a medium-sized network of Madlib sites. They all had about the same crawl stats, so I just grabbed a random one. They were all brand new domains.

Power Indexing Tips

Eli over at Bluehatseo explains how to Power Index big sites with more than 20,000 pages. Interesting concept

Really great idea, cant wait to try it!

Eli

Once again an awesome post - problem is everytime I read your blog more ideas are presented and I just can’t keep up with it man!!!

However dont for one moment stop!

A sideline question… When you’re rolling out mega sites, do you stagger the growth? I don’t have the experience to know if it’s true, but I hear about the need to avoid mega jumps in website sizes (not to raise any flags)…

Thanks

Bloop

Eli,

Nice tips.

About the last method: it’s still a lot of link building work to get the rollover sites indexed. What would be the benefit of getting those indexed and 301ing all the pages to the “real” site, rather than working on the “real” site’s link building directly?

Best Regards

VJ

Great question!

Rollover sites only need to be built once. They can be used over and over to get all your future projects indexed. They are quite a bit of work, but they don’t just serve a one-time purpose after which you’re done with them. If you are the type that is constantly building new projects, they become invaluable and well worth the effort.

Thank you for the answer.

However, once you 301 all the pages to the new site, won’t the rollover site automatically get de-indexed by the search engines?

A 301 basically means that you have moved the document elsewhere, so why would the SEs keep those pages in the index?

You also mention that it will get just about anything indexed “instantly,” which is surprising since it takes the SEs a few weeks to recognize a 301. What do you have to say about that?

Thank you for your time!

VJ

I’m sorry, I realize some people learn best by getting a concept of things and then asking questions until they understand it, but I can’t answer that question any better than the actual post does. If you just skimmed the post and started asking questions, I’m afraid this is the wrong venue. You’re going to actually have to read it, and then if you still don’t understand ask someone else who read it and maybe they can explain it more clearly than I did.

I understand your point,

Thank you anyway

Thanks for the question though. I think you may not be alone, in that some people may assume the main page will redirect as well. The main page stays the same; it just links to the subpages until they have been indexed and replaced by the redirects. Likewise, the subpages don’t all get redirected at once. Each one only gets redirected after it has been indexed, at which time it gets replaced by a new page with a new target.

Oh I get it now!

Time to code…

thx Eli!

Ok, this might be far too basic for some people, but I thought I’d spit it out there anyway. I was thinking about random content, and about how Google must have some means in place to differentiate between pages that are updated often and pages that are randomly generated. So I figured that for a particular site I’d go completely pseudo-random: each page is assigned a static random number, which is used in conjunction with an offset of day + month to pull content from a db. But I’m with some of the others - I’ll get to the rollover sites once I’ve completed my other assignments. Thanks again for all the ideas Eli.

Trying the tool on a couple of sites. I’ll post the results in the next days.

I submitted a page at 11:20pm and had it indexed by 11:27pm of the same day. I went to log the time I did it so I could report the visit and when I refreshed the page, Google had already visited.

Excellent work Eli!

Correction to my previous post. It wasn’t indexed, but visited within 7 minutes.

Thanks for the article. I have a question for the community. You talk about switching the order of your pages on the sitemap so the crawler visits different pages. What if you had one sitemap that contained all 30k pages, and you also made SITE MAP A, which linked to SITE MAP B, C, D, etc.? SITE MAP B lists the first 1k pages, SITE MAP C lists the next 1k pages, and so on. If you then promoted SITE MAP A, the crawler would visit the first sitemap, then the smaller ones, and then your pages. I guess the question that needs answering to make this work properly is how many page jumps the crawler would make before saying enough. If it were limitless, you could keep the crawler jumping from page to page in many different directions for a long time with this strategy.

it seems that we have a new rising star here. this time - a blue hat star! Bravo!

Thanks for the info, is gonna come in handy with the new site I am currently making!

Yes, this information is awesome and will really help SEO beginners index their sites very quickly. I even checked one of the quick indexing tools and the site got indexed in 2 days…

I’ve just finished my first madlib and started making a rollover site for it, then realized there was an easier way to do it.

You need to get a second domain and point it at the same place as your madlib site (so you can view the site through both domains - your original one and the new one).

Then modify your index page. It should check if http_host is the original domain and if it is, continue as normal, otherwise it’s the new domain so output links to 50-100 subpages.

Now modify the code which displays your subpages - check http_host, if it’s the new domain then work out whether the page has been indexed or not and if it has, do a 301, otherwise display the page as normal.

Finally do the cron to check which pages have been indexed.

The downside to doing things this way is that your rollover sites aren’t re-usable, but on the plus side it requires virtually no effort at all. Most of the code required is for the cron to do the site: command, and that is re-usable.
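For anyone who wants to see the shape of that, here is a rough Python sketch of the host check described above. It is only an illustration: `route`, its parameters and the returned tuples are made up, real code would sit inside whatever renders your pages, and it assumes the 301 points at the same path on the original domain.

```python
def route(host, path, original_host, indexed_paths, subpage_paths):
    """Return (status_code, payload) for one request.

    host          -- value of the HTTP Host header
    indexed_paths -- set of paths the cron has found indexed on the new domain
    subpage_paths -- the 50-100 paths the new domain's index page links to
    """
    if host == original_host:
        # Original domain: behave exactly as before.
        return 200, "render " + path
    if path == "/":
        # New domain's index page: just list the current subpages.
        return 200, "\n".join(subpage_paths)
    if path in indexed_paths:
        # Already indexed on the new domain: hand it over to the real site.
        return 301, "http://" + original_host + path
    return 200, "render " + path
```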

I saw a Matty C vid recently in which he stated, don’t worry about launching big sites unless they’re over a million pages…

Hey guys. Would you recommend putting a rollover on its own domain or would a subdomain suffice?

Eli,

For the rolling sitemap you’re still referring to a single page sitemap, correct?

Eli - When creating rollover sites, do you leave links to the rollover site’s pages that have already been indexed and 301’d on the homepage (or elsewhere on the site)? Otherwise, wouldn’t the search engines delist the pages that don’t have any links from elsewhere?

Very interesting stuff. I’m currently coding a rollover site in PHP for my own seo empire. Should be massive !

Creative new technique as always

Thanks for info.

Great ideas. I can’t wait to try this on a site.

This information is very awesome…

Great, thanks …I added you to my network and saved a couple of your bookmarks….thanks for information.

Isn’t there a tool to do this?

Merry Christmas/

No fear of the big sites.Very motivating…Thanks.I really appreciate the tips and guide .

Great, thanks. I’ll try this methods to index better some of my sites.

Merry Christmas

10 days for such Google hits, that’s a lot! I wonder when I’ll be so familiar with these tricks!

I’m assuming that it takes a while for a person to create an 20k + website of original content. Another method is every time a page is created is to social bookmark the page once it created. It will usually get indexed with in a day. It’s probably not the most efficient strategy but an effective one.

I think something even more important than power indexing is that you need to get your content indexed fast, I mean at least once in every 2 days. You know the buzz about Google Hot Trends nowadays, right? It’s the key to massive traffic!

Thanks …I added you to my network and saved a couple of your bookmarks….thanks for you information.

Jesus thats a lot of google hits. Great info thanks.

That’s a really good technique for indexing. Thanks!

Cool stuff. This is really powerful!

Great stuff here, but stop telling the world all our secrets

It’s great to know how powerful indexing is.

Very good indexing tips indeed.

Really gold topic. But is it possible to get banned by Google for redirecting?

Very useful if you could package all your scripts into a service.

I wish I was able to use your blue hat SEO tool, before that jerk got it shut down.

I came across this blog almost by accident, but I’ve settled in here for the long haul. I stayed because everything is very interesting. I’ll definitely tell all my friends about you.

Very useful information…thanks for sharing…

I didn’t know Indexing could be so powerful.

i thought that i know everything about indexing, but you proved me wrong

Appreciate the info, I am interested in hearing more about rss feeds and is the feed link a “nofollow” or “dofollow”? At West Coast Vinyl, we’ve been looking into this, any input would be great. thanks!

Really very informative post. I first time saw such type of search engine stats. Because i don’t have enough knowledge related to all search engines algorithm. Thanks for sharing with me buddy..

Google’s stats looking impressive. Indexing is really essential and it helps website to cache all its pages easily. Really nice indexing tips.

Thanks for sharing such a informative post with me. I bookmarked you web page and will see it deeply when i get free from busy schedule..

You shared with me such confidential info. I’m very thankful to you for it. you describe all search engine stats superbly..

Latest ideas are defined in this blog, therefore, i want to be added to this unique blog.

Thanks for the great blog, definitely bookmarked for future reference

Eli, one thing I wanted to ask: what about a site that has more than 3 million pages? Will the trick mentioned above solve the problem for that as well?

Great Site - ive bookmarked it for later use when the site has so many pages!

I cant believe how much useful content I found here.

Being an experienced person in SEO even i benefited from this article. Keep working. Thanks.:)

Thanks for the great post. Very interesting stuff!!!

I do agree with you on this article, although I read from another blog something different from what you are saying.

Anyway thank you for your review.

All the best!

Thanks for useful post. I will use some of the indexing tips and I`m curious about the results.

thanx for topics

hmm interesting ..i will give it a try Tatuaggi piede

thanks for giving me such useful and great technique,this will help me in future.

thanks again!

This site is a goldmine. So much useful info, I can even put up with the sexist jokes:)

Great idea. Thanks for sharing.

Awesome post, thanks as always. I emailed this to a friend.

This is a quality blog,thank you for passing on your knowledge with us all.

Great article for one of the fundamentals of SEO.

Yeah but this no longer works under Caffeine.

Great post - even if the math doesn’t add up!

Thank you for teaching us on how to index sites quickly and thoroughly. I will try these techniques or ways on my future sites!

This post about Rolling Sitemaps are the first thing that I’ve ever heard about indexing your sites. It’s really a great concept. But how did you add 100,000 to your website with just a single entry? Did you use a tool? Is there a wordpress plugin that does the rolling sitemap? Thanks!

When you think of happy, unhappy or think of you when you smile in my mind wander

i feel so good

yeah, many indexed pages there are many chances for getting more visitors.

When I build links I try to do them very slowly to stay below the google radar. I would hate to get my sites banned from the SERPS.

I have been marked with capital letters, the points are worth and the number of times that occur in the set of Scrabble tiles (for English), but I wonder if there is a more useful way to classify Wordnik here.

Great idea. Thanks for sharing!!!

That’s awesome. I was wondering how to get a huge site like that indexed so fast. Can’t wait to read more about these kind of advanced site strategies.

Thanks for this great blog and this helpfull post.

A very interesting post I stumbled across. Will certainly try some of these techniques on my web sites in future.

Glen

Very good post! I will try this methods to get higher ranking in the search engines. Thanks for the article.

Wow this is valuable SEO tips. I must admit that I never knew about rollover sites. It’s something that I may or may not try, but it’s definitely good to know some new techniques. Thanks.

I agree with you idea, provide us more advance seo technique my friend.

This is my new favorite blog… for real. So many valuable SEO tips

Your ideas are really great! Thanks for sharing them with us!

I agree with you idea, provide us more advance seo technique my friend..

Nice one, there are actually some good points on this blog that some of my readers may find useful. I must send a link, many thanks.

I believe this really is excellent information. Most people will concur with you, and I ought to thank you for it.

hi your ideas are so different and great

thanks for sharing it.

Hi,

very good posting. My english is not so good, but even I understood everything. Keep going and write always easy like that please.

Thanks,

Egon

loving this

very good article

many thanks

This is my first time visiting here. I found so much entertaining stuff in your blog, especially the discussion. From the tons of comments on your articles, I guess I am not the only one enjoying myself here! Keep up the good work.

ANAM

thanks for the article

tim

Good work, Bluehat!

Love to see more comments here, really nice topic to discuss.

On the last pic (the blue window shot), it may work better with the right side cropped a bit to remove the column edge but leave the shadow.

Extremely helpful! It is a great way of providing info and a very nice article. These are really good, advanced techniques.

thanks for this interesting article.

Blue Hat SEO is top in almost every respect: the amount of (relevant) information is large (more about that also the Alexa ranking for the linking domain is given, underlining that it is in link building and to direct traffic, not just indirectly through better rankings), and the presentation is appealing. This is especially true for the additional information at the end, which is clearly presented as either a chart or a ranking (e.g. anchor text).

All power to ya Eli.

I guess I’ll add you back to my daily bloglist. You deserve it my friend.

I’ve been meaning to start a video blog for some time now

keep it up

thanx

Dude you’re smart.

I would like to thank you for the effort you have put into writing this blog. I am hoping for the same high-grade blog posts from you in the future as well. In fact, your creative writing skills have inspired me to get my own blog going now. Blogging is really spreading its wings and growing quickly. Your write-up is a good example.

It is really interesting. I continuously look for these kinds of blogs, which give a lot of information.

I get a lot of information from this and wish to complete this job.

I read on the net about sorts, teaching and other blogs.

I haven’t checked in here for some time because I thought it was getting boring, but the

These tips are awesome… I work in SEO and these tips are very helpful for me… I am sharing this wonderful blog with my SEO partners!!!

Great idea. Thanks for sharing.

It is an awesome post

I was wondering how to get a huge site like that indexed so fast. Google doesn’t like it when they see it.

Excellent post, one of the few articles I’ve read today that said something unique! One new subscriber here.

Yeah, it’s awesome

I manage to include your blog among my reads every day. You have interesting entries that I look forward to. This is a nice web site.

Thats Great

Power indexing is a good one, but I think that since Panda it is no longer valid or useful at all.

wonderful. I think that your viewpoint is deep, it’s just well thought out and truly incredible to see someone who knows how to put these thoughts so well. Good job!

This article is GREAT. EXCELLENT JOB, and what a great tool!

Hey, you rascal.. you are never going to mend your ways

Great Post Worth a Bookmark xD

Thank you very much for this article. This is a good way to attract more and more links.

Oh my GOD….. really great

Really very good article….

Godd Manclr…

heheh

Great post indeed, was a pleasure to read. Thanks a lot.

Some day I will be a CEO, an SEO entrepreneur and an SEO blogger. You see this path once you know what Google SEO is. If that is true, I will be an SEO trainer.

Nice tip, I’ve never thought of just rolling the URLs into my sitemap. I’ll try it.

Thanks for this article, very interesting.

Helpful article. I use a lot of your tips now!

Thanks and have a nice time

Great Inspirations for my Blog!

Thanks

Internal linking is crucial. Do not forget about it and focus only on building external links.

The trick behind power indexing lies in using the correct technique. Otherwise, it is just useless!

Eli, thank you for the info on Indexing, it helped me understand it way better!!

I found your website about a week ago and have been reading it. All I can say is THANK YOU! Like I say, I am new to all this but I hope to be able to contribute soon.

Rob is right. It’s the content that burns your site not the growth speed.

Superb post! I’ll try these methods to better index some of my sites. Thanks for this blog, Eli.

Damn, I already have it and yet I am doing the same thing again

Also acquire domains with PageRank and host your sites on many different IP addresses. You don’t want Google to detect patterns, so be careful with your crosslinking.

very useful information!

it is what I’m looking for!

Thanks for the info!

Regards,

CoolTips2u

I realize that if you launch the site with both ads and content, that the ads will increase the percentage of non-unique content from page to page, and that the ads show up as elements on every single page. I also get the concept of the pages needing to contain quality, unique content, and understand how publishing the site without ads can help it get indexed and not trigger any sort of penalty.

This is truly a great read for me. I have bookmarked it and I am looking forward to reading new articles.

Keep up the good work.

nice man

Great article

thanks

Nice post. It explains this very well.

gooooooood man ..!

thaaaaaaaaaaanks

indexing is such a great tool

i need to check out power indexing

Feel free to follow along to the point of getting uncomfortable. There’s no need to fry braincells trying to follow techniques that you are not ready for.

I’m impressed. A really educational and trustworthy weblog that does precisely what it sets out to do.
