This is a follow-up post to my 100’s Of Links/Hour Automated - Black Hole SEO post.

I’m going to concede on this one. I admittedly skipped a few explanations of some fundamentals, and I think that left a lot of people out of all the fun. After reading a few comments, emails and inbound links (thanks Cucirca & YellowHouse for good measure) I realize that unless you already have adequate experience building RSS Scraper Sites, it’s very tough to fully understand my explanation of how to exploit them. So I’m going to do a complete re-explanation and keep it completely nontechnical. This post will become the post that explains how it works, and the other will be the one that explains how to do it. Fair enough? Good, let’s get started with an explanation of exactly what an RSS scraper site is. So once again, this time with an MGD in my hand, cheers to Seis De Mayo!

Fundamentals Of Scraping RSS

Most blogs automatically publish a feed in either RSS or Atom format, both of which are XML. Here’s mine for an example. These feeds basically consist of a small snippet of your post (usually the first 500 or so characters) as well as the Title of the post and the Source URL. This is so people can add your blog to their Feed Readers and be updated when new posts arrive. Sometimes people like to be notified on a global scale of posts related to a specific topic. So there are blog search engines that compile all the RSS feeds they know about, either through their own scrapings of the web or through people submitting feeds via their submission forms. They allow you to search through millions of RSS feeds by simply entering a keyword or two. An example of this might be to use Google Blog Search’s direct XML search for the word puppy. Here’s the link. See how it returned a bunch of recent posts that included the word Puppy in either the title or the post content snippet (description). These blog search engines are known as RSS Aggregators. The most popular are Google Blog Search, Yahoo News Search, and Daypop.
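If you have never looked inside a feed, here is a rough sketch of what one contains and how a keyword search over it works. The feed text, the example.com URLs, and the `parse_items` and `search_items` helpers are all made up for illustration; a real aggregator does the same thing across millions of feeds.

```python
import xml.etree.ElementTree as ET

# A made-up RSS 2.0 feed: every <item> carries a title, a source link,
# and a description (the short snippet of the post).
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Example Blog</title>
    <link>http://www.example.com/</link>
    <description>A made-up feed for illustration</description>
    <item>
      <title>Training Your New Puppy</title>
      <link>http://www.example.com/training-your-new-puppy</link>
      <description>The first few hundred characters of the post...</description>
    </item>
    <item>
      <title>Weekend Hiking Trails</title>
      <link>http://www.example.com/weekend-hiking-trails</link>
      <description>We brought the puppy along for the first time...</description>
    </item>
    <item>
      <title>Fixing A Leaky Faucet</title>
      <link>http://www.example.com/fixing-a-leaky-faucet</link>
      <description>Start by shutting off the water supply...</description>
    </item>
  </channel>
</rss>"""


def parse_items(feed_xml):
    """Return a list of dicts with the title, link and description of each item."""
    root = ET.fromstring(feed_xml)
    items = []
    for item in root.iter("item"):
        items.append({
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            "description": item.findtext("description", default=""),
        })
    return items


def search_items(items, keyword):
    """Keep items whose title or description contains the keyword (case-insensitive)."""
    needle = keyword.lower()
    return [
        entry for entry in items
        if needle in entry["title"].lower() or needle in entry["description"].lower()
    ]


if __name__ == "__main__":
    for entry in search_items(parse_items(SAMPLE_FEED), "puppy"):
        print(entry["title"], "->", entry["link"])
```

Notice that the second post matches on its description alone, which is the same title-or-snippet matching described above.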

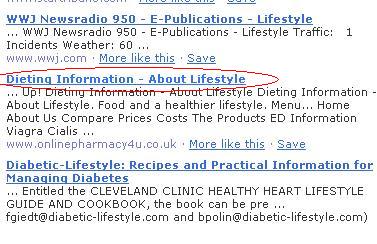

So when a black hatter wants to create a massive site based on a set of keywords and needs lots and lots of content, one of the easiest ways is to scrape these RSS Aggregators and use the Post Titles and Descriptions as actual pages of content. This, however, is a de facto form of copyright infringement, since they are taking little bits of random people’s posts. The post titles don’t matter because they can’t be copyrighted, but the actual written text can be, especially if the person chose to have the description in their feed include the entire post rather than just a snippet of it. I know it’s bullshit how Google is allowed to republish the information but no one else is, but like I said, it’s de facto. It only matters to the beholder (which is usually a bunch of idiotic bloggers who don’t know better). So to keep it on the up and up, the Black Hatters always make sure to give proper credit by linking to the original post as indicated in the RSS feed they grabbed. This backlink cuts down the number of complaints they have to deal with and makes their operation legitimate enough to continue stress free. At this point they are actually helping the original bloggers by not only driving traffic to their sites but giving them a free backlink. Google becomes the only real victim (boohoo). So when the many, many people who use public RSS Scraper scripts such as Blog Solution and RSSGM start producing these sites on a mass scale, they scrape thousands of posts, typically from the three major RSS Aggregators listed above. They just insert their keywords in place of my “puppy” and automatically publish all the posts that result.

After that they need to get those individual pages indexed by the search engines. This is important because they want to start ranking for all the subkeywords that show up in the post titles and post content. This results in huge traffic. Well, not huge, but a small amount per RSS Scraper site they put up. This is usually done on a mass scale over thousands of sites (also known as Splogs, spam blogs), which adds up to lots and lots of search engine traffic. They fill each page with ads (MFA, Made For Adsense sites) and turn the click-throughs on that traffic into money in their pockets. Some Black Hatters make this their entire profession. Some even create upwards of five figures’ worth of sites, each targeting different niches and keywords. One of the techniques they use to get these pages indexed quickly is to “ping” Blog Aggregators. Blog Aggregators are nothing more than a rolling list of “recently updated blogs.” So they send a quick notification to these places by automatically filling out and submitting a form with the post title and the URL of their new scraped page. A good example of the most common places they ping can be found in mass ping programs such as Ping-O-Matic. The biggest of those is probably Weblogs.com. They will also do things such as comment spam on blogs and other link bombing techniques to generate lots of deep inbound links to these sites so they can outrank all the other sites going for the niche the original posts covered. This also explains why Weblogs.com is so worthless now. Black Hatters can feed these sites thousands of RSS Scraped posts daily, where legitimate bloggers can only do about one post every day or so. So these Blog Aggregator sites quickly get overrun, and it can easily be assumed that about 90% of the posts that show up on there actually point to and from RSS Scraper Sites. This is known as the Blog N’ Ping method.

I’m going to stop the explanation right there, because I keep saying “they” and it’s starting to bug me. Fuck guys, I do this too! Haha. In fact most of the readers here do it as well. We already know tens of thousands, if not more, of these posts go up every day and give links to whatever original source is specified in the RSS Aggregators. So all we’ve got to do is figure out how to turn those links into OUR links. Now that you at least know what it is, let’s learn how to exploit it to gain hundreds of automated links an hour.

What Do We Know So Far?

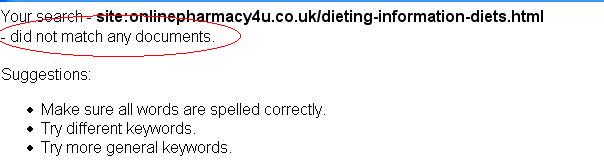

1) We know where these Splogs (RSS Scraper sites) get their content. They get them from RSS Aggregators such as Google Blog Search.

2) We know they post up the Title, Description (snippet of the original post) and a link to the Source URL on each individual page they make.

3) We know the majority of these new posts will eventually show up on popular Blog Aggregators such as Weblogs.com. We know these Blog Aggregators will post up the Title of the post and a link to the place it’s located on the Splogs.

4) We also know that somewhere within these post titles and/or descriptions are the real keywords they are targeting for their Splog.

5) Also, we know that if we publish fake posts using these titles to the same RSS Aggregators the Black Hatters use, eventually (usually within the same day) these Splogs will grab and republish our posts on their sites.

6) Lastly, we know that if we put in our URL as the link to the original post, the Splogs, once updated, will give us a backlink and probably post up just about any text we want them to.

We now have the makings of some serious inbound link gathering.

How To Get These Links

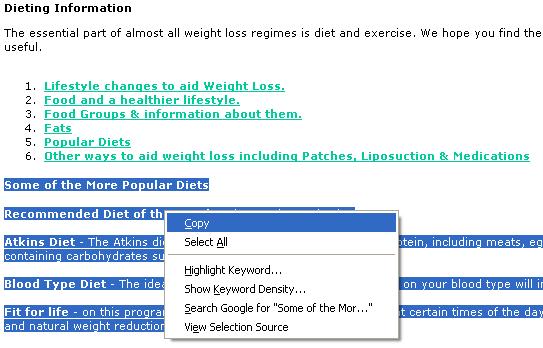

1) First we’ll go to the Blog Aggregators and make a note of all the post titles they provide us. This is done through our own little scraper.

2) We take all these post titles and store them in a database for use later.

3) Next we’ll need to create our own custom XML feed. So we’ll take 100 or so random post titles from our database and use a script to generate an RSS or Atom .xml file. Inside that file we’ll include each individual Title as our Post Title. We’ll put in our own custom description (could be a selling point for our site). Then we’ll put our actual site’s address as the Source URL, so that the RSS Scraper sites will link to us instead of someone else.

4) After that we’ll need to let the three popular RSS Aggregators listed above (Google, Yahoo, Daypop) know that our XML file exists. So, using a third script, we’ll go to their submission forms and automatically fill in and submit each form with the URL of our RSS feed file (www.mydomain.com/rss1.xml). Here are the forms:

Google Blog Search

Yahoo News Search

Daypop RSS Search

Once the form is submitted, then you are done! Your fake posts will now be included in the RSS Aggregators’ search results. Then all future Splog updates that use the RSS Aggregators to find their content will automatically pick up your fake posts and publish them. They will give you a link and drive traffic to whatever URL you specify. Want it to go to direct affiliate offers? Sure! Want your money making site to get tens of thousands of inbound links? Sure! It’s all possible from there; it’s just a matter of how you want to twist it to your advantage.
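For a feel of what the feed file from step 3 looks like, here is a minimal sketch using nothing but Python’s standard library. The `build_feed` helper, the sample titles, the description, and the example.com address are all placeholders I made up for illustration; it only builds the XML text and leaves the database and the form submissions out entirely.

```python
import xml.etree.ElementTree as ET


def build_feed(titles, description, source_url, channel_title="Example Feed"):
    """Build an RSS 2.0 document with one <item> per title.

    Every item reuses the same description and the same source URL,
    which is the feed layout described in step 3.
    """
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = channel_title
    ET.SubElement(channel, "link").text = source_url
    ET.SubElement(channel, "description").text = description

    for title in titles:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = title
        ET.SubElement(item, "link").text = source_url
        ET.SubElement(item, "description").text = description

    # encoding="unicode" returns a str instead of bytes
    return ET.tostring(rss, encoding="unicode")


if __name__ == "__main__":
    # Placeholder titles; in the walkthrough these would come from the database.
    sample_titles = ["Training Your New Puppy", "Puppy Food & Treats"]
    feed_xml = build_feed(
        sample_titles,
        description="A short custom description goes here.",
        source_url="http://www.example.com/",
    )
    print(feed_xml)
```

ElementTree escapes characters like `&` in the titles for you, so the output stays valid XML no matter what text ends up in it.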

I hope this cleared up the subject. Now that you know what you’re doing you are welcome to read the original post and figure out how to actually accomplish it from the technical view.

100’s Of Automated Links/Hour

Enough of this common sense shit, let’s do some real black hat. So the deal is I’m going to talk about desert scraping one more time, and this time just be perfectly candid and disclose the actual spin I use on the technique.
